A case study in collaboration that brings social science, subject-matter expertise, and technology together to move beyond prediction — toward AI-enabled decision support and prescriptive insight.

**********

A Missing Link: Turning Scattered Insights Into a Unified Lens

Human trafficking is one of the most intractable human rights challenges of our time. Despite decades of effort and courageous frontline work, the field has struggled with a persistent gap: the inability to see, clearly and at scale, how systemic factors like housing, migration, and economic policy shape vulnerability, and where interventions can truly change trajectories.

That challenge extends far beyond trafficking. Across social sectors, decisions are often siloed by discipline, geography, or funding stream; research, data, and program evaluations exist, but remain scattered, incompatible, or incomplete.

How do we turn scattered data into a shared understanding that drives action?

This case study of a partnership among lived experience, subject-matter, and technology experts offers one possible answer — a demonstration of how responsible AI, paired with social-science rigor and lived experience, can begin to close that gap.

Under the Hood: How AI and Social Science Meet

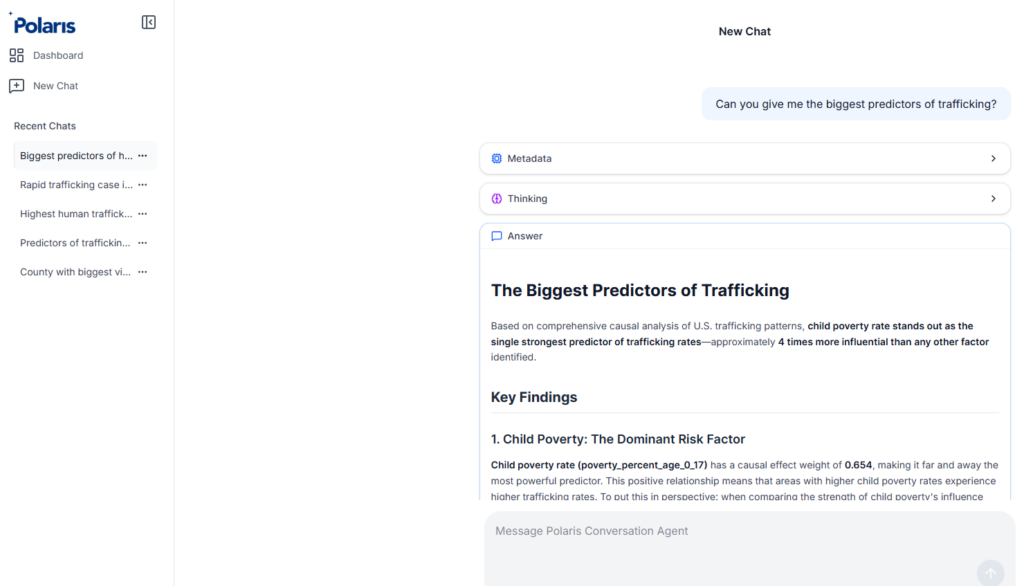

At the heart of this work is a prototype tool that demonstrates how AI and the social sciences can converge into a structured approach for identifying the factors most predictive of risk at a population level, and for exploring how policies and investments can steer risk trajectories toward prevention.

Built collaboratively by Polaris, CML Insight, and Limbik, the prototype reflects the integration of deep social-sector expertise, advanced modeling capabilities, and a shared commitment to ethical and responsible innovation. Polaris brought more than two decades of experience operating the National Human Trafficking Hotline and supporting survivors. Technology firms CML Insight and Limbik contributed their expertise in causal and agentic AI and supported the compilation of trusted open-source data sets. The Patrick J. McGovern Foundation, with a commitment to responsible AI experimentation in the nonprofit sector, provided the seed funding.

Here’s what powers the tool:

- Trusted, multi-source datasets drawn from both national and community-level sources, including the U.S. Census, the Bureau of Labor Statistics, the Centers for Disease Control and Prevention, and a decade of aggregated hotline insights, along with specialized county-level indices of health, justice, and rural well-being.

- Layered modeling approach integrating predictive analytics to detect vulnerability patterns with causal AI that explores directional drivers and interdependencies among factors such as poverty, housing, and unemployment.

- Real-world evidence (RWE) extraction embeds insights from peer-reviewed trafficking intervention studies, ensuring the model aligns with proven approaches and identifies areas where new evidence is needed.

- Agentic AI components enable dynamic but bounded exploration of insights, prompting users toward evidence-based inquiry. Strict guardrails are maintained by pairing a reasoning agent with a validation agent, both anchored to the Single Source of Truth data, and by keeping a human in the loop.
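The guardrail idea in the last bullet, a validation step that checks what a reasoning step proposes against a single vetted dataset, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Claim` type, the `validate` function, the county names, and the placeholder values are hypothetical and do not describe the prototype's actual implementation or data.

```python
from dataclasses import dataclass

# Hypothetical single source of truth: (county, indicator) -> vetted value.
# These numbers are placeholders for illustration, not real statistics.
SOURCE_OF_TRUTH = {
    ("County A", "child_poverty_rate"): 0.25,
    ("County B", "child_poverty_rate"): 0.10,
}


@dataclass
class Claim:
    """A figure that a (hypothetical) reasoning agent wants to cite."""
    county: str
    indicator: str
    value: float


def validate(claim: Claim, tolerance: float = 1e-9) -> str:
    """Approve a claim only if it matches the vetted data.

    Anything missing from, or contradicting, the source of truth is
    routed to a person instead of being shown to the user.
    """
    key = (claim.county, claim.indicator)
    if key not in SOURCE_OF_TRUTH:
        return "needs_human_review"
    if abs(SOURCE_OF_TRUTH[key] - claim.value) > tolerance:
        return "needs_human_review"
    return "approved"


proposed = [
    Claim("County A", "child_poverty_rate", 0.25),  # matches the vetted value
    Claim("County B", "child_poverty_rate", 0.40),  # contradicts the vetted value
    Claim("County C", "child_poverty_rate", 0.15),  # absent from the vetted data
]
for claim in proposed:
    print(claim.county, validate(claim))
```

In this sketch only the first claim is approved; the other two are escalated for human review rather than silently corrected, which is one simple way to keep a person in the loop.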

As a first prototype, the tool is already surfacing valuable insights. The next step involves validating these findings, bringing in more subject-matter expertise and trusted datasets, refining the architecture, and incorporating more voices to enhance accuracy and relevance.

What the Data Now Tell Us

One finding stands out from this early prototype: child poverty consistently emerges as the strongest directional predictor of trafficking risk across U.S. counties.

“For years, survivor leaders and frontline practitioners have told us that poverty is one of the deepest roots of exploitation,” said Megan Lundstrom, CEO of Polaris. “Now, this tool has finally provided us with the evidence behind that truth.”

This does not claim a single cause, but it highlights where policy levers have the power to transform lives. It gives practitioners and decision-makers an evidence-based lens through which to prioritize prevention efforts.

Testing Scenarios: When Policy Becomes Prevention

To illustrate this in practice, we conducted a scenario analysis of New Mexico’s pioneering universal childcare policy. The program aims to remove income limits and copays, saving families an average of $12,000 per child per year.

When queried, the model projected a specific increase in median household income in New Mexico, translated that increase into a reduction in the state’s child poverty rate, and linked that reduction to lower trafficking risk. The result is a tangible example of how anti-poverty policy can become an anti-trafficking strategy.
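The chain of reasoning in this scenario (policy raises income, higher income lowers child poverty, lower child poverty lowers risk) can be sketched as two linked steps. This is only an illustration under strong assumptions: the function name `propagate_scenario`, the linear form of each step, and every number below are hypothetical placeholders, not outputs or parameters of the actual model.

```python
def propagate_scenario(income_change_pct: float,
                       poverty_points_per_income_pct: float,
                       risk_units_per_poverty_point: float) -> tuple[float, float]:
    """Chain two assumed linear effects: income -> child poverty -> risk index."""
    # Step 1: change in the child poverty rate, in percentage points.
    poverty_change_points = income_change_pct * poverty_points_per_income_pct
    # Step 2: change in a risk index driven by the poverty change.
    risk_change = poverty_change_points * risk_units_per_poverty_point
    return poverty_change_points, risk_change


# Placeholder inputs chosen only to make the arithmetic easy to follow.
poverty_change, risk_change = propagate_scenario(
    income_change_pct=10.0,               # hypothetical +10% median household income
    poverty_points_per_income_pct=-0.25,  # hypothetical: -0.25 points per +1% income
    risk_units_per_poverty_point=2.0,     # hypothetical: 2 risk units per poverty point
)
print(poverty_change, risk_change)  # -2.5 -5.0
```

A real causal model would estimate these relationships from data, with uncertainty and possibly nonlinear effects; the sketch only shows how an upstream policy effect can be carried through to a downstream risk estimate.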

It’s a powerful example of how technology, used thoughtfully, can help policymakers quantify what many already know: systemic solutions are the most enduring ones.

For Those New to AI: Why This Matters

For those just beginning to explore tools like ChatGPT, it can be hard to grasp how AI systems differ in design and why those differences matter.

When you ask ChatGPT a question like, “What are the leading causes of human trafficking?”, it draws from vast amounts of text (articles, research papers, and online discussions) and predicts what words are most likely to follow. That makes it remarkably powerful for language-based tasks, such as writing, summarizing, and brainstorming.

But ChatGPT and systems like it are designed to generate and communicate knowledge, not to evaluate evidence. Because they work by predicting patterns in language, they can sometimes “hallucinate,” producing confident answers that sound right but may not be grounded in verified data.

In most contexts, that’s not a flaw; it’s what makes generative AI so powerful and versatile. But in domains like human trafficking, where insights guide policy and funding, we need a different kind of tool: one that reasons from data rather than prose.

The approach described here is built for that purpose. Instead of predicting what sounds plausible, this model is designed to reason transparently from trusted evidence:

- Guided by deep domain expertise — grounded in Polaris’s two decades of frontline experience, along with practitioner and lived-experience insight that shaped the model’s hypotheses and helped identify strong proxy indicators

- Grounded in verified data — drawing from structured public datasets and peer-reviewed research to evaluate relationships using rigorous statistical and analytical methods

- Transparent by design — making visible where insights come from, the strength of relationships, and the limits of both data and model

- Human-in-the-loop oversight — involving social scientists, data experts, and survivor leaders who validate assumptions, challenge outputs, and refine interpretation

- Adaptive but bounded — using reasoning and validation agents that improve over time while remaining anchored to evidence and ethical safeguards

In other words, while ChatGPT excels at communicating knowledge, this system is built to guide decision-making based on rigorous evidence. It doesn’t replace human judgment; it strengthens it by offering clarity, transparency, and evidence that help us weigh trade-offs, test policy scenarios, and invest where change is most likely to take root.

That’s the real promise of purpose-built AI: not to predict or persuade, but to illuminate the evidence behind complex human systems so we can act wisely.

Reimagining Impact: From Fragmented Data to Collective Action

This prototype marks the beginning of a new chapter, moving the field from fragmented data to integrated, actionable insight. More than a decision-support tool for the anti-trafficking field, it builds the systems-level understanding needed to dismantle the structures that enable exploitation. It shows how poverty, housing, labor, and migration intersect to shape vulnerability, and how decision-makers across sectors can act on shared evidence to drive collective impact.

The next steps will focus on validation, field testing, and expansion to new datasets, in a co-design process with partners across government, service providers, and survivor-led organizations. The goal is not simply to refine a model or tool, but to build a shared framework where evidence drives strategy and AI amplifies human expertise.

This is also an invitation to the broader “tech for good” and social justice communities — from researchers and technologists to funders, policymakers, and corporate partners — to collaborate on creating tools that strengthen human decision-making, rather than replacing it. By combining domain expertise, science, ethics, and innovation, we can build systems that turn knowledge into action and insight into impact.
